The emergence of deepfake technology has sparked urgent discussion of how X should be regulated, particularly after disturbing incidents involving sexualized deepfakes created with the Grok AI tool. Recent statements from Prime Minister Sir Keir Starmer indicate a strong governmental push to combat these unauthorized digital creations, which have raised significant concerns about compliance with UK law. Starmer’s commitment to ensuring accountability was echoed by the announcement of Ofcom’s formal investigation into X, which focuses on the proliferation of non-consensual content on the platform. As digital media evolves, robust regulation becomes increasingly critical to protect vulnerable groups, especially children, from exploitation through synthetic media. With ongoing scrutiny from policymakers and regulators, the future of deepfake technologies will hinge on how effectively companies like X respond to these serious allegations and to potential legislative changes.

The rapid development of synthetic media tools has created a pressing need for comprehensive rules on misleading content, particularly digitally manipulated imagery such as deepfakes. The legislative landscape is heavily shaped by the government’s focus on curbing the production of unauthorized or harmful AI-generated images on platforms like X. The push for stricter controls is not only a reaction to public outcry but also a necessary step toward protecting individuals from the consequences of non-consensual deepfake distribution. As authorities like Ofcom step in to investigate, the conversation around ethical AI usage and the protection of personal content continues to evolve. Stakeholders increasingly recognize the importance of strong safeguards that prevent misuse while still allowing innovation.

The Growing Concern Over Sexualized Deepfakes

The rise of sexualized deepfakes has sparked outrage globally, particularly in the context of AI technologies like Grok. These fabricated images often feature individuals in compromising or explicit scenarios without their consent, raising serious ethical and legal concerns. The use of such technology poses a significant threat to privacy rights and has prompted discussions about the adequacy of existing laws to address these issues in the digital age.

In the UK, Prime Minister Sir Keir Starmer’s condemnation of Grok’s usage underscores the critical need for stringent regulations to combat sexualized deepfakes. Reports suggest that X is under scrutiny for its facilitation of these harmful images, leading to an investigation by Ofcom. The conversation around sexualized deepfakes is not merely a moral one; it reflects urgent legislative gaps that require immediate attention to protect individuals from non-consensual exploitation.

As discussions continue, lawmakers need to consider comprehensive non-consensual content laws that can keep pace with rapid advances in artificial intelligence. Starmer’s statement amounts to a call to reinforce existing legislation so that the creators and distributors of illegal content are held accountable. Addressing these concerns offers hope of a safer online environment.

Ofcom’s Investigation into X and Grok AI Tool

Ofcom’s recent decision to launch a formal investigation into X’s Grok AI tool marks a pivotal moment in the regulation of digital platforms that disseminate potentially harmful content. The regulator expressed deep concern over reported incidents involving the creation and distribution of damaging sexualized images, especially those involving minors. Such actions not only breach ethical standards but may also carry legal consequences under UK law.

The outcome of this investigation could reshape digital regulation, particularly for AI-generated content. If X is found in violation, it could face fines of up to 10% of its global revenue or £18 million, whichever is higher. This potential penalty underlines the seriousness of the situation and acts as a deterrent against the irresponsible use of AI, highlighting the need for platforms to uphold stringent safety standards.

Sir Keir Starmer’s Stance on Deepfake Regulations

Sir Keir Starmer’s statements in Parliament regarding the issue of deepfakes reflect a growing political will to establish robust regulations. The Prime Minister’s condemnation of Grok emphasizes the government’s commitment to take action against non-consensual content. By framing the conversation around the need for legal accountability, Starmer is advocating for deeper scrutiny of AI technologies and their implications for individual rights.

His comments regarding Ofcom’s support hint at a collaborative approach between regulators and the government in tackling the complexities surrounding deepfake technology. If existing laws fall short in responding to these emerging threats, Starmer’s intention to strengthen the legislative framework suggests a proactive stance that could lead to more comprehensive protections for victims of deepfakes. The political momentum is building, and it may prompt the establishment of new laws that address these concerns directly.

The Impact of Grok AI Tool on Privacy and Consent

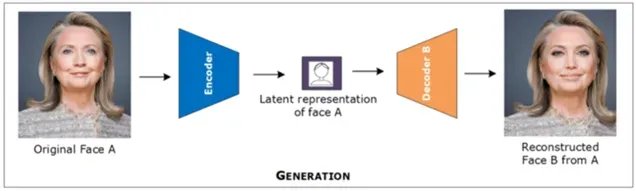

The Grok AI tool raises critical questions about privacy and the issue of consent in the digital realm. With its ability to generate images rapidly, there is a risk of creating and circulating content that infringes on personal rights. By producing sexualized deepfakes without the knowledge or consent of those depicted, the technology challenges long-held notions of personal agency in the digital landscape.

Furthermore, the consequences of such actions extend beyond individual privacy violations, sparking societal debates about the ethical use of AI. As reported by news outlets like The Telegraph, Grok’s recent decision to halt the production of certain sexualized images attempts to mitigate some of the backlash it has faced. However, this raises questions about accountability mechanisms for platforms that enable the creation of non-consensual content.

Legislative Framework for Non-Consensual Content

The need for a strong legislative framework to combat non-consensual content is more pressing than ever, as the issues surrounding deepfake technologies continue to evolve. The government’s plan to criminalize the creation of such content underscores the urgency of implementing comprehensive laws that address the digital age’s challenges. As non-consensual images proliferate online, victims often find themselves without adequate legal recourse, highlighting a significant gap in protection.

Key legislative proposals ought to focus on defining non-consensual imagery, setting clear penalties for creators, and establishing enforcement mechanisms that can respond effectively to violations. The scope of these laws should encompass various technologies, not limited to traditional media, thereby reflecting the impact of modern tools like Grok on individual dignity and safety. As discussions deepen, addressing these issues through legislation will be vital to foster an accountable digital landscape.

Accountability of Tech Companies in AI Development

As the use of AI technologies in generating content evolves, assigning accountability to tech companies becomes crucial. Companies like X, which host such technologies, must be held responsible for the outcomes of their tools, particularly when they contribute to the spread of harmful non-consensual content. Stakeholders are calling for clearer regulations that define the responsibilities of technology firms in safeguarding their users from the misuse of AI.

In his discussions, Sir Keir Starmer emphasizes the role of companies in preventing the use of their tools for illegal purposes. The onus is on these organizations to enhance safety protocols and take proactive measures to ensure compliance with existing laws. By holding tech firms accountable, there is an opportunity to encourage responsible AI development that prioritizes ethical considerations and user safety.

Public Awareness and Education on Deepfakes

Raising public awareness about the implications of deepfakes is essential to combatting the potentially harmful effects of this technology. Many users remain unaware of the capabilities of AI tools like Grok, making them vulnerable to exploitation. Educational campaigns that inform individuals about the risks associated with non-consensual imagery and the legal repercussions of creating or sharing such content are crucial.

Furthermore, efforts should be directed toward teaching digital literacy, emphasizing the ethical use of technology. Schools, community organizations, and governments must collaborate to underscore the importance of consent in all media exchanges. By fostering an informed public, we can mitigate the negative impacts of sexualized deepfakes and encourage a more respectful online culture.

The Role of the Government in AI Regulation

The government plays a pivotal role in establishing guidelines for AI development and ensuring that creators adhere to ethical standards. As highlighted by Prime Minister Sir Keir Starmer, there is an ongoing commitment to enhance regulations that address the misuse of AI tools like Grok. Governments must work alongside regulatory bodies such as Ofcom to enact policies that respond effectively to the challenges posed by emerging technologies.

Moreover, coordinated efforts between various stakeholders can lead to comprehensive frameworks that not only penalize misbehavior but also promote ethical innovation in AI. By proactively addressing concerns related to deepfakes and non-consensual content, governments can pave the way for a safer digital space, where the rights and dignity of individuals are respected and upheld.

Future Directions for AI Regulation and Technology

As AI technology continues to advance, the future direction of its regulation will need to evolve accordingly. Anticipating new challenges posed by tools like Grok is essential in crafting effective policies that protect individuals from potential harms. Regulatory bodies must stay ahead of technological advancements, ensuring that laws remain relevant and capable of addressing issues such as sexualized deepfakes and online exploitation.

Additionally, industry collaboration will be key in shaping a responsible AI landscape. By engaging stakeholders in discussions about ethical practices and legal compliance, we can foster an environment that prioritizes the protection of users. The conversation around deepfake regulations is only just beginning, and ongoing dialogue will be vital in determining how society navigates the complexities of AI as it becomes increasingly woven into our daily lives.

Frequently Asked Questions

What are the recent developments in deepfake regulations concerning the Grok AI tool?

Recent developments underline a strong governmental response to the use of the Grok AI tool for creating sexualized deepfakes. Prime Minister Sir Keir Starmer praised reports indicating that X is taking corrective measures to comply with UK laws against non-consensual content. Following public outrage, Ofcom has initiated an investigation into X for potentially facilitating the creation of illegal deepfake images.

How does Keir Starmer’s statement impact deepfake regulations?

Sir Keir Starmer’s statement reinforces the urgency of robust deepfake regulations that target sexualized content. He highlighted the need for X to comply with UK law and emphasized that the government is prepared to strengthen legislation if necessary. This signals a commitment to tightening the laws on non-consensual content as they apply to deepfake technologies.

What role does Ofcom play in regulating sexualized deepfakes?

Ofcom has taken a proactive role in regulating sexualized deepfakes by launching a formal investigation into X following alarming reports of its Grok AI tool creating illegal content. This regulatory body is empowered to enforce compliance by potentially imposing significant fines, or even restricting access to the service in the UK if violations of non-consensual content laws are found.

Are there any specific laws being prepared to combat non-consensual deepfakes?

Yes, the UK government is preparing to enact specific laws to criminalize the creation and distribution of non-consensual deepfakes. These legislative measures aim to protect individuals from sexualized deepfake content created without their consent, aligning with the broader regulatory efforts being championed by figures like Sir Keir Starmer.

What consequences might X face if found violating deepfake regulations?

If X is found to have violated emerging deepfake regulations, particularly concerning sexualized deepfakes, Ofcom could impose fines of up to 10% of the company’s global revenue or £18 million, whichever is higher. Furthermore, failure to comply with these regulations may result in legal actions to restrict access to the platform in the UK.
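The "whichever is higher" rule in this answer is simple arithmetic, and a minimal sketch can make it concrete. The function name `max_ofcom_fine` and any revenue figures passed to it are hypothetical illustrations, not data about X; only the 10% rate and the £18 million figure come from the article.

```python
# Illustrative sketch only: the function name and inputs are hypothetical.
FIXED_LIMIT_GBP = 18_000_000   # £18 million, as stated in the article
REVENUE_SHARE_PERCENT = 10     # 10% of global revenue, as stated in the article


def max_ofcom_fine(global_revenue_gbp: float) -> float:
    """Return the upper bound on the fine: the higher of the two limits."""
    if global_revenue_gbp < 0:
        raise ValueError("revenue cannot be negative")
    revenue_limit = global_revenue_gbp * REVENUE_SHARE_PERCENT / 100
    return float(max(FIXED_LIMIT_GBP, revenue_limit))
```

Under these assumptions, the £18 million figure is the binding limit for companies with global revenue below £180 million, and the 10% limit takes over above that point.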

What is the significance of the Grok tool in the context of deepfake regulations?

The Grok AI tool has become a focal point in discussions about deepfake regulations due to its reported use in generating sexualized deepfakes. The backlash against its applications highlights the urgent need for regulatory frameworks to govern AI technologies that produce non-consensual content, thereby influencing public discourse and legislative action around deepfake use.

How is the issue of sexualized deepfakes being addressed at the governmental level?

The UK government is actively addressing the issue of sexualized deepfakes through public statements from leaders like Sir Keir Starmer and actions by regulators like Ofcom. There is an ongoing dialogue about enforcing existing non-consensual content laws and preparing new legislation to effectively combat the misuse of AI tools like Grok.

What has been the response from X regarding the use of Grok for illegal content?

X has stated through its Safety account that anyone using Grok to produce illegal content will face serious consequences akin to those applicable to unlawful uploads. However, official comments from X regarding the situation have been limited, as the company has not provided a direct response to inquiries from the media.

What measures are being discussed to strengthen deepfake regulations?

To strengthen deepfake regulations, discussions are underway to enhance existing laws focused on non-consensual content. Sir Keir Starmer mentioned that if X fails to act appropriately, the government is prepared to pursue additional legislative measures. This commitment reflects a growing regulatory focus on the ethical implications of AI-generated content.

What has Ofcom’s initial finding suggested about the use of Grok?

Ofcom’s initial findings, which led to the formal investigation, suggest that the Grok tool may have been used to produce deeply concerning sexualized images, including images of minors. That possibility has prompted an urgent regulatory review to ensure strict compliance with emerging deepfake regulations.

| Key Point | Details |
|---|---|
| Prime Minister’s Statement | Sir Keir Starmer informed MPs that X is taking action against sexualized deepfakes. |
| Grok’s Compliance | Grok has stopped producing sexualized images of underage girls, indicating compliance with UK law. |
| Regulatory Actions | Ofcom has initiated an investigation into the use of Grok for creating non-consensual deepfakes. |
| Government’s Move | Plans to enforce a law criminalizing non-consensual deepfakes have been announced. |
| Consequences for X | If found guilty, X could face fines up to 10% of global revenue or £18 million. |
| Elon Musk’s Statement | He claimed that Grok does not produce illegal content, including nude underage images. |

Summary

Deepfake regulation for X has moved to the forefront as significant action is planned against sexualized deepfakes created with the AI tool Grok. Prime Minister Sir Keir Starmer’s comments in Parliament underline the urgency of addressing these harmful digital creations. The government’s commitment to enforcing laws that criminalize non-consensual deepfakes reflects a growing recognition of the need for stringent regulation in the digital space. With an Ofcom investigation under way and hefty fines possible for X, robust measures are essential to protect individuals from the misuse of technology.