
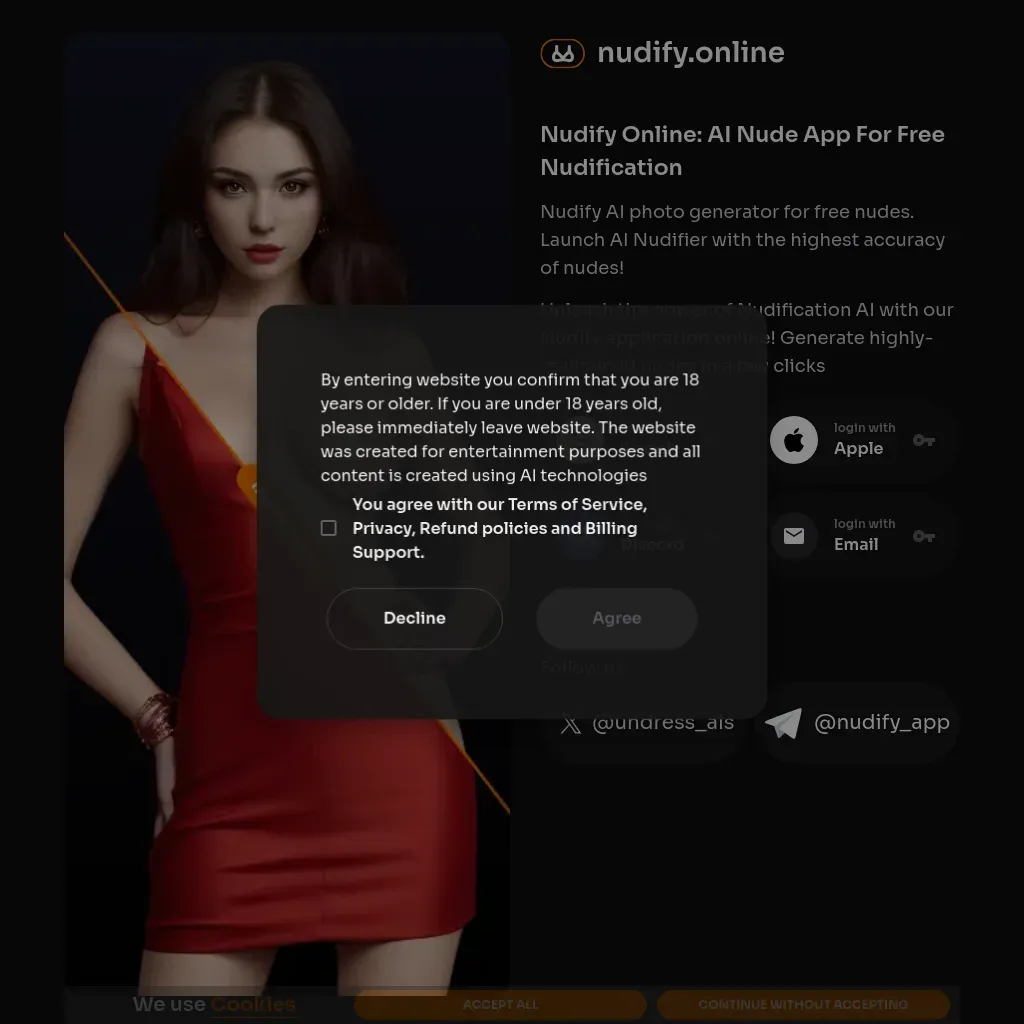

Nudification apps are at the center of a heated debate now that the UK government has moved to ban these tools, which manipulate images and videos to digitally undress the people in them. The decision is part of a wider strategy to combat online misogyny and reduce violence against women. Under the proposed laws, it will be illegal to create or distribute AI applications that digitally undress individuals without their consent. According to Technology Secretary Liz Kendall, such technologies have been weaponized to exploit women and girls, who deserve safety both online and offline. Amid ongoing concerns over AI deepfake regulation, the move signals the government’s commitment to protecting people from this form of digital abuse.

The recent initiatives to eliminate “de-clothing” applications reflect a growing recognition of the harm caused by image manipulation technologies. These tools, which let users generate realistic images of individuals without clothing, have sparked outrage and highlight the broader issue of online gender-based violence. As authorities seek to tighten regulations around sexually explicit deepfakes and protect vulnerable populations, the fight against non-consensual content is becoming more urgent. Campaigns advocating for stronger protections emphasize the need for comprehensive strategies that combine legislation with collaboration with technology firms to thwart such abuses. As the conversation around AI in digital spaces continues, establishing ethical and legal frameworks to govern these tools becomes increasingly important.

Understanding the Impact of Nudification Apps on Online Safety

Nudification apps pose a significant threat to online safety, particularly for women and girls. These applications exploit generative AI technology to manipulate images, creating false representations of individuals stripped of their clothing. This not only violates personal privacy but also promotes a culture of online misogyny that fuels further violence against women. The recent announcement by the UK government to ban these nudification apps reflects a growing recognition of the need for stronger regulations to protect individuals from the ramifications of non-consensual imagery.

The harms inflicted by these apps extend beyond mere embarrassment; they can lead to long-term psychological consequences for victims. Experts argue that the proliferation of fake nude images can intimidate and control women online, undermining their safety and wellbeing. As authorities seek to combat these threats, it is clear that prohibiting such technologies is an essential step in the broader fight against gender-based violence and abuse in the digital sphere.

Frequently Asked Questions

What are nudification apps and why are they being banned by the UK government?

Nudification apps use AI to create realistic images, without the subject’s consent, that make the person appear nude. The UK government is banning these apps to combat online misogyny and reduce violence against women, because they produce non-consensual imagery and exploit the people depicted.

How do nudification apps contribute to online misogyny?

Nudification apps contribute to online misogyny by facilitating the creation of non-consensual explicit images that humiliate and exploit women. These apps weaponize technology against individuals, fostering an environment where violence against women is normalized through digital abuse.

What is the government’s stance on AI deepfake regulation related to nudification apps?

The UK government aims to enhance AI deepfake regulation as part of its response to the risks posed by nudification apps. New laws will make it illegal to create or distribute these apps, ensuring that those involved in their production face legal consequences under the Online Safety Act.

What measures is the UK government taking to prevent non-consensual imagery?

The UK government is implementing new laws to ban nudification apps and is collaborating with tech companies to develop AI technologies that can identify and block non-consensual imagery. This initiative is part of a broader strategy to reduce violence against women and protect vulnerable groups.

Why are nudification apps considered harmful, especially for children?

Nudification apps are particularly harmful because they can be used to manipulate images of minors, leading to the creation of child sexual abuse material (CSAM). This poses serious threats to the safety and well-being of children, prompting calls for a comprehensive ban on such technologies.

How are UK safety tech initiatives helping combat the issue of nudification apps?

UK safety tech initiatives, such as partnerships with companies like SafeToNet, are focused on developing AI tools to detect and block sexual content, including from nudification apps. These efforts aim to prevent the distribution of non-consensual imagery and protect individuals from online exploitation.

What role do child protection organizations play in the movement against nudification apps?

Child protection organizations, such as the Internet Watch Foundation, advocate for stricter regulations on nudification apps. They emphasize the need for comprehensive measures to prevent images from being manipulated into explicit content, and in doing so support the government’s efforts to combat online harm.

What penalties are being proposed for those who create or distribute nudification apps?

The UK government proposes that individuals who create or distribute nudification apps will face serious legal repercussions, ensuring that anyone profiting from or facilitating these technologies will be held accountable under the new laws.

| Key Point | Details |
|---|---|
| New Legislation | The UK government plans to ban nudification apps to combat online misogyny. |
| Purpose of Ban | The ban is part of a strategy to reduce violence against women and girls by half. |
| Current Legal Framework | Creating explicit deepfake images without consent is already illegal under the Online Safety Act. |
| Government Statement | Technology Secretary Liz Kendall emphasized the need for online safety for women and girls. |
| Collaboration with Tech Companies | The government plans to work with tech firms like SafeToNet to enhance protections against image abuse. |
| Child Protection Concerns | Experts warn that nudification apps can lead to the creation of harmful fake imagery. |
| Call for Action | Child protection organizations are urging stronger measures against such technologies. |
| NSPCC’s Position | The NSPCC welcomes the ban but calls for device-level protections against CSAM. |

Summary

Nudification apps will soon be banned in the UK as a significant step towards protecting women and girls from online abuse. The government’s decision reflects a commitment to reducing online misogyny and builds on existing laws against non-consensual imagery. Collaborations with technology companies, along with recent calls from child protection organizations, highlight the urgency of addressing the risks posed by these apps. The new law aims to create a safer online environment in which technology does not contribute to exploitation.