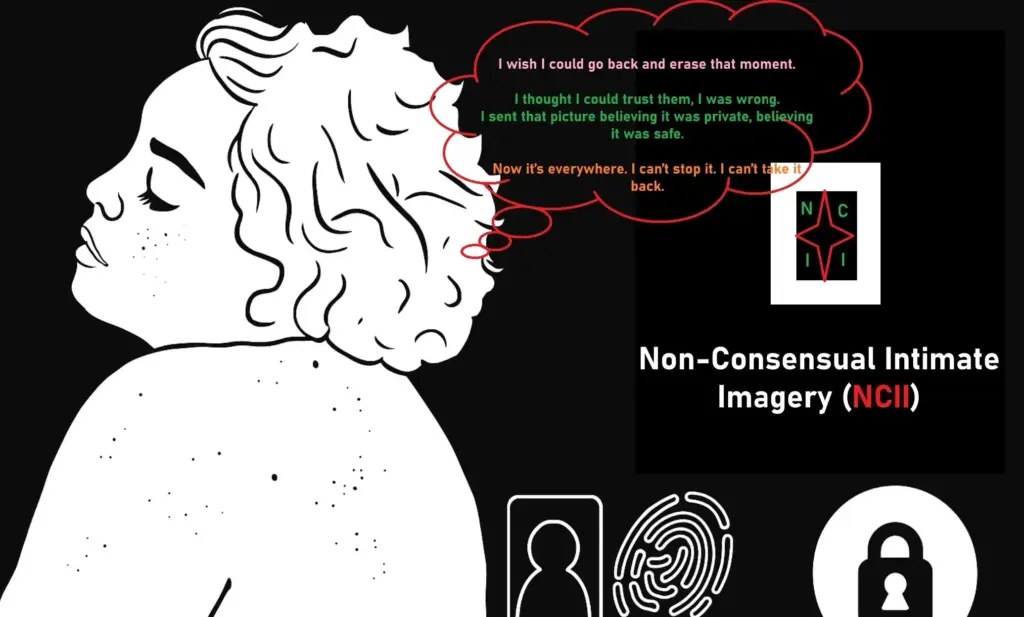

Non-consensual intimate images have emerged as a critical issue in today’s digital landscape, raising profound concerns about privacy and exploitation. The UK government is taking a firm stance against the problem by bringing into force legislation that criminalizes the creation and distribution of such images. Responding to concerns that Elon Musk’s controversial Grok AI chatbot can be used to generate harmful content, Technology Secretary Liz Kendall emphasized that these AI-generated images are not harmless artifacts but weapons of abuse. The changes will hold individuals accountable, and they will also target platforms like X that enable the spread of non-consensual intimate images. By tackling the issue under the Online Safety Act, the government aims to make online spaces safer for all users, particularly women and children.

The digital exploitation of people through unauthorized intimate imagery, often called image-based abuse or revenge porn, poses significant threats to personal safety and dignity. As public outcry grows, recent legislative efforts spearheaded by Liz Kendall aim to curtail the ability of platforms like X to host or facilitate this abuse. The rise of deepfake technology, particularly AI-generated visuals, makes stringent regulation even more pressing. The Online Safety Act reflects a broader commitment to hold both perpetrators and platforms accountable for the spread of such content. The increasingly urgent debate around online ethics underscores the need for comprehensive protection against the misuse of technology.

The Online Safety Act and Its Role in Combating Non-Consensual Intimate Images

The Online Safety Act is a pivotal piece of UK legislation aimed at strengthening protections against many forms of digital abuse, including non-consensual intimate images. Technology Secretary Liz Kendall has set a clear directive to outlaw the creation and distribution of these images, so it is increasingly important for individuals to understand what the law means. The act criminalizes sharing intimate images without consent. It also holds digital platforms accountable for the content shared on their sites, fundamentally reshaping the landscape of online safety.

Kendall’s firm stance highlights the gravity of sharing intimate images without consent. It makes clear that this behavior is not just a personal violation but a serious crime under the Online Safety Act. By requiring platforms like X to take responsibility for the content they host, the act pushes for greater accountability and targets the systemic issues behind digital abuse. The Online Safety Act is therefore central to ongoing efforts to combat the creation and spread of AI-generated intimate images and to build safer online environments for women and children.

The Impact of AI-Generated Images on Society

The rise of AI-generated images has raised significant ethical concerns, especially regarding their use in producing non-consensual intimate images. These technologies, when misused, can become digital weapons that violate personal autonomy and dignity. As the UK government prepares to enforce stricter regulations against such abuses, it becomes essential to recognize the broader social implications. Not only do these AI-generated images exploit and objectify individuals, but they can also perpetuate harmful stereotypes and contribute to a culture of violence against women.

Furthermore, AI tools like Elon Musk’s Grok chatbot highlight the urgent need for legal frameworks, such as deepfake legislation, that confront these modern challenges. Combining powerful AI tools with irresponsible use could worsen existing societal problems, making it crucial for regulators to intervene. Laws addressing the creation and manipulation of non-consensual intimate images must evolve alongside technological advances to safeguard rights and dignity in the digital age.

The Legal Framework Against Non-Consensual Intimate Images

The legal framework against non-consensual intimate images has recently been strengthened by the government’s commitment to prioritize this offense under existing laws, including the Online Safety Act and the Data (Use and Access) Act. As Liz Kendall announced, the government’s approach is proactive rather than merely reactive. It aims to tackle the root causes by preventing companies from supplying the tools used to create such abusive content. This effort to curb both the creation and the unauthorized distribution of intimate images reflects a societal shift toward greater accountability for individuals and platforms alike.

Kendall’s emphasis on accountability underscores a critical aspect of the legal landscape: technology platforms must play an active role in mitigating digital harm. Requiring companies like X to monitor and remove illegal content from their platforms is a significant step toward curtailing the spread of non-consensual intimate images. This legal obligation protects individuals, fosters a safer online environment, and deters potential offenders from engaging in such harmful activities.

The Responsibilities of Technology Platforms in Preventing Digital Abuse

The responsibility of technology platforms like X for combating digital abuse, particularly the distribution of non-consensual intimate images, has come under scrutiny as government officials call for stricter regulation. Liz Kendall has stressed that platforms’ responsibility goes beyond mere compliance: they must actively implement measures to protect users. This expectation aligns with the Online Safety Act’s broader mission to minimize harm online and promote a safer internet.

Accountability mechanisms ensure that platforms cannot simply sidestep their obligation to protect users from digital exploitation. The measures Kendall described signal a shift toward demanding proactive engagement from technology companies, rather than allowing them to remain passive observers. Such action is increasingly necessary as AI-generated images are used to exploit people without their consent. This highlights the urgent need for platforms to take real responsibility for maintaining a safe digital sphere.

The Importance of Timely Enforcement in Digital Legislation

Timely enforcement of digital legislation is crucial in addressing the issue of non-consensual intimate images and other forms of digital abuse. Liz Kendall’s insistence on a swift timeline for investigations reflects an acknowledgment that delays can perpetuate further harm to victims. The proactive approach of implementing immediate legal measures underscores the urgency with which the government views these offenses and the potential risks posed by AI technologies such as Grok.

With calls for rapid action against platforms that fail to tackle illegal content, the message to non-compliant companies is clear: the government is prepared to take stringent action to enforce compliance. This urgency reassures the public and reinforces the principle that digital safety cannot be an afterthought. It must be prioritized so that regulation keeps pace with fast-evolving technology and the safety challenges it creates.

Public Response to Government Action Against Non-Consensual Images

The announcement of new measures to combat non-consensual intimate images has drawn a significant response from the public and activists alike. Many people want to see the government take a firmer stance against the exploitation of vulnerable groups, particularly women and children. Activists have long criticized government delays in addressing these concerns. Kendall’s latest comments have galvanized support for rapid action and prompted wider discussion of accountability for both individuals and platforms.

Public sentiment reflects both support and skepticism; while many applaud the government’s initiative to tackle these injustices, others question whether current resources, specifically within law enforcement, can adequately support the execution of these ambitious plans. The available manpower and technological infrastructure to enforce new laws effectively will be integral in determining the success of these efforts in combating the dissemination of non-consensual images.

Potential Consequences for Infringing Companies

The consequences for companies that fail to comply with the rules on non-consensual intimate images are substantial. Under the Online Safety Act, Ofcom, the UK’s online safety regulator, can fine platforms that breach their duties up to £18 million or 10% of their qualifying worldwide revenue, whichever is greater. It can also seek court orders compelling them to change their practices or, in the most serious cases, having them blocked in the UK. The prospect of severe financial penalties is an important deterrent and may push companies to take the necessary steps to prevent abuse on their platforms.

Beyond the financial stakes, the UK government’s commitments also highlight the reputational risks that accompany legal violations. A platform found to consistently harbor abusive content risks losing public trust and credibility. It is therefore in the interest of X and other companies to adopt preventive measures proactively, contributing to a safer online community while reducing their exposure to legal penalties.

Activism and Advocacy in the Digital Age

In recent years, activism against the misuse of digital platforms has gained tremendous momentum, pushing for significant reforms to the law on non-consensual intimate images. Advocacy groups and concerned citizens have taken to social media with campaigns that raise awareness of the harm caused by AI-generated content and the urgent need for legal protection. With leaders like Liz Kendall pressing for tangible change, these efforts have been further amplified, strengthening the wider push against digital exploitation.

Activists stress that the fight against non-consensual images must go beyond punitive measures to include education that informs users about their rights and the potential dangers of certain technologies. As the government begins enforcing the new laws, advocacy groups will need to work with lawmakers to ensure comprehensive strategies are in place. Those strategies should make support accessible to victims and help cultivate a culture of respect and safety online.

Navigating the Future: AI Technologies and Ethical Considerations

The interplay between AI technologies and ethical practice has emerged as a critical issue in discussions about non-consensual intimate images. As platforms deploy advanced AI tools for content generation, the potential for misuse is alarmingly evident. Governments and technology experts must work together to establish ethical guidelines for AI systems, particularly for features that can be used to create exploitative images without consent. This proactive stance protects individuals and helps build trust in AI technologies as they continue to evolve.

Emerging regulations must adapt to evolving understandings of consent and representation in the digital landscape. By prioritizing education and ethical standards in technological development, society can harness the benefits of AI while minimizing the risks of unregulated use. As non-consensual intimate images become an international concern, aligning technological advances with robust ethical frameworks is essential to securing a safer digital future.

Frequently Asked Questions

What are non-consensual intimate images and why are they a concern?

Non-consensual intimate images refer to explicit photos or videos that are shared or created without the consent of the individuals depicted. They are a major concern due to their role in harassment, exploitation, and abuse, particularly against women and children. Recent discussions surrounding AI-generated images have highlighted the potential for misuse, prompting legal actions like the UK’s forthcoming legislation under the Online Safety Act to combat such offenses.

How does the Online Safety Act address non-consensual intimate images?

The Online Safety Act makes sharing intimate images without consent a criminal offense for individuals and places legal duties on platforms to tackle such content. The legislation aims to hold accountable those who create, share, or threaten to share non-consensual intimate images, including AI-generated content, thereby improving digital safety for users.

What measures are being taken against platforms like X regarding non-consensual intimate images?

Ofcom, the UK’s communications regulator, is investigating whether X has adequately addressed non-consensual intimate images, particularly those altered using Grok AI. If X is found to be in breach of the law, it could face significant fines. It may also be compelled to remove illegal content immediately and improve user protections against such abuse.

What repercussions do individuals face for creating non-consensual intimate images using AI technology?

Individuals attempting to create or share non-consensual intimate images, including those generated using AI tools like Grok, will face legal consequences under the newly implemented laws. This includes potential criminal charges as the government seeks to enforce stringent penalties for violations of the Online Safety Act.

What steps should technology companies take to prevent non-consensual intimate images from being created or distributed?

Technology companies are expected to implement measures outlined by Ofcom, such as enhancing moderation protocols and restricting access to tools that facilitate the creation of non-consensual intimate images. Companies like X must take proactive steps to comply with regulations to protect users and avoid legal penalties.

What is the role of AI-generated images in the discussion of non-consensual intimate content?

AI-generated images play a significant role in discussions about non-consensual intimate images, as they can be created easily and without consent, raising ethical and legal concerns. The UK government’s focus on legislation against non-consensual images reflects the urgency to address the misuse of AI technologies in creating harmful content.

How can the public report non-consensual intimate images they encounter online?

People who encounter non-consensual intimate images online should report them directly to the platform hosting the content. They can also contact the police or support organizations dedicated to tackling online abuse, which can help secure a swift response to such violations of privacy.

What does the recent investigation by Ofcom into X entail regarding non-consensual intimate images?

Ofcom’s investigation into X is focused on determining whether the platform has adequately removed non-consensual intimate images and taken the necessary measures to prevent such content from being shared. Depending on how far X has complied with the law, the outcome could lead to significant legal repercussions.

What has been the public response to the government’s actions against non-consensual intimate images?

The public response has been largely supportive of the government’s actions against non-consensual intimate images, especially given increased awareness of online harassment. Advocacy groups have called for faster implementation of the laws to protect potential victims of abuse and exploitation.

What is the significance of the term ‘deepfake legislation’ in relation to non-consensual intimate images?

Deepfake legislation is significant as it directly addresses the creation of altered content, including non-consensual intimate images, using AI technology. As deepfakes can be used to produce harmful, unauthorized depictions of individuals, establishing legal frameworks around this technology is vital for preventing abuse and protecting personal privacy.

| Key Point | Details |
|---|---|
| New Legislation | The UK will make it illegal to create non-consensual intimate images. |
| Government’s Standpoint | Technology Secretary Liz Kendall condemned AI-generated intimate images as ‘weapons of abuse’. |
| Investigation by Ofcom | Ofcom is investigating X over intimate images altered with Grok and may fine the platform. |
| Enforcement Delay | Although the law was passed in 2025, its enforcement was delayed until now. |
| Responsibility of Platforms | Platforms that host such images, like X, must be held accountable. |
| Activist Concerns | Activists have criticized the UK government for delays in implementing protections. |
| Global Backlash | Other countries, including Malaysia and Indonesia, have temporarily banned such AI tools. |

Summary

Non-consensual intimate images have become a focal point of legal reform in the UK, as new legislation aims to curb their creation and distribution. The new law reflects the serious harm that AI-generated content can cause. It reinforces a commitment to protect individuals, particularly women and children, from exploitation and abuse. Technology platforms and individuals alike must be held accountable to prevent the misuse of such damaging content.